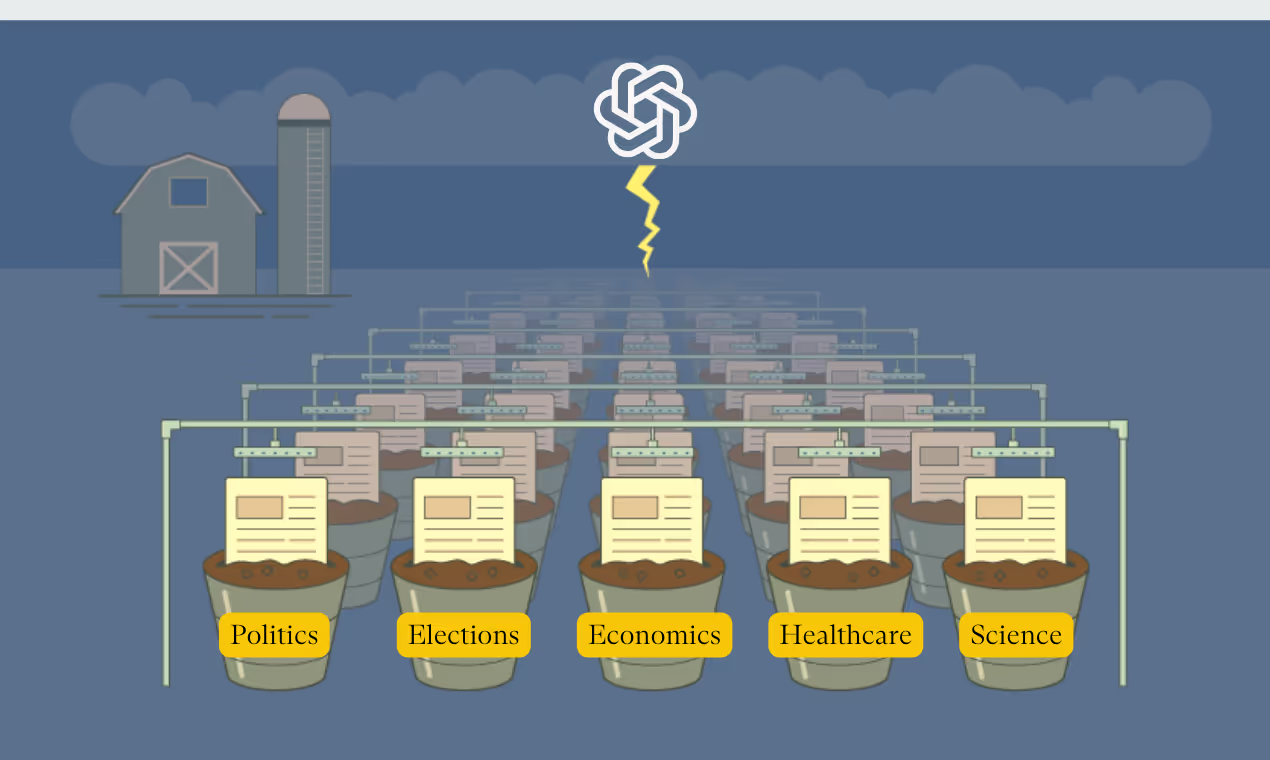

AI-driven tools have fuelled a surge in AI-generated news websites, often referred to as "Content Farms."

Built on SEO-friendly templates, they churn out vast quantities of content at unprecedented speed and minimal cost, all to get as many users onto their pages as possible.

While this may seem like a major boost for information dissemination, it poses significant challenges to online information verification and integrity.

This is especially true for politics and high-profile social issues, where credible information is essential.

How are these sites made?

In the past, content farms relied predominantly on human labour, which limited how much they could produce in a short time.

These websites are built on templates optimised for search engines like Google or Bing. When such a template is paired with content that is also SEO-friendly, the website becomes highly visible in search results.

But with the growing availability of generative AI tools, anyone can make a website that can produce thousands of pieces of content a day as long as they have a website that has an SEO-friendly structure.

The people behind these websites often publish without regard for the accuracy or quality of the content.

So one user could, hypothetically, just use ChatGPT to generate articles en masse on topics such as politics, economics, and healthcare.

This process is so accessible that you can even watch short step-by-step YouTube tutorials to make these websites fully operational.

What’s even more worrying is that there is a freelance market that will prepare, personalise, and launch one of these websites within 48 hours, often for less than $100 (SOURCE).

Making content farms has never been easier with the emergence of generative AI tools.

So why should we be concerned about this?

There are many reasons for concern, but let’s look at two for now:

1. Hallucination Rates

The sheer volume and pace of content production facilitated by AI drives up the prevalence of misinformation. As we have discussed previously, AI tools like ChatGPT hallucinate a large proportion of the time (SOURCE).

As a result, content farms, particularly those focusing on current events, contribute to a surge in "hallucinations": inaccurate news stories presented as factual information.

This rampant production of misinformation undermines public trust and fuels more aggressive discourse about social and political issues online.

2. Agenda Concerns

AI-generated content is built entirely on the prompts its users enter. If a user has dubious intentions for the content they want to produce, generative AI tools will help bring those intentions to life.

With this in mind, content farms can be used specifically to propagate biased narratives or deliberately push false ones about certain topics.

For instance, the steady flow of disinformation surrounding elections poses a grave threat to electoral integrity. A content farm can produce thousands of pieces of content a day, steering users towards a specific viewpoint with polarising and divisive language.

This content can even feed disinformation campaigns, and such campaigns are already starting to affect politics (SOURCE).

Because these websites are so readily available and easy to set up, the problem of disinformation flooding search engines like Google and Bing will only get worse.

But aren’t these actions illegal?

The legal position on AI-generated content and copyright is still complicated and unclear. Legal experts are still working out how copyright infringement applies to AI.

Freelancing platforms use this ambiguity to sidestep accountability for AI models drawing on existing works. They do so by having freelancers state that the content produced does not infringe copyright because it is transformative.

Also, the anonymous nature of these websites, and the fact that they look much like other news websites, make it very difficult to crack down on them for pushing factually inaccurate content.

The exponential growth of content farms necessitates robust tools for discerning factual accuracy amidst the vast sea of misinformation.

The best way to stop these websites before they become widespread is to distinguish factually correct content from incorrect content.

As stated previously, these websites are not producing content to be factual or credible. This means the generative AI tools behind them are most likely hallucinating and producing content with no credible sources.

That factually inaccurate content is their weakness.

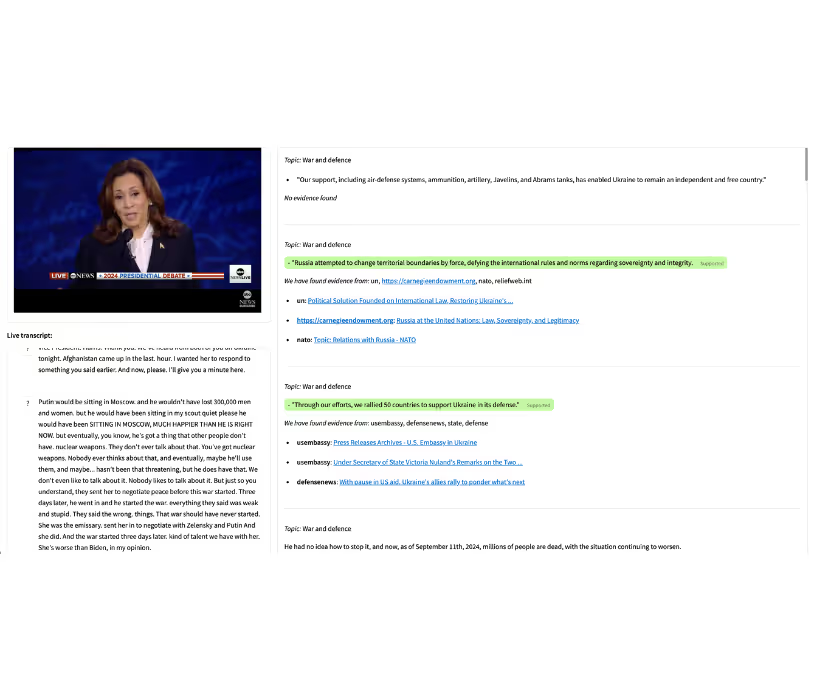

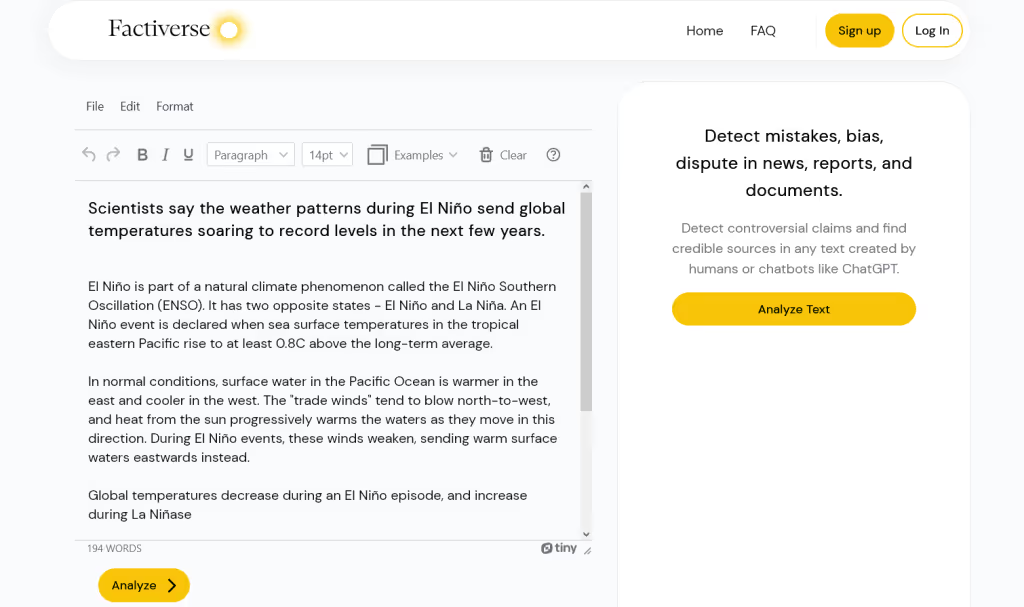

Factiverse's AI Editor is the best tool to make that weakness as clear as day. It can identify factual inaccuracies within textual content.

First, it identifies the claims within a piece of text. Then it highlights the extent to which each of those claims is disputed or supported.
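To make those two steps concrete, here is a minimal Python sketch. It is not Factiverse's implementation, which this article does not describe. The function names `split_into_claims` and `summarise_evidence` are hypothetical, claim detection is reduced to simple sentence splitting, and the evidence verdicts are made up by hand rather than retrieved from real sources.

```python
import re
from collections import Counter


def split_into_claims(text):
    """Hypothetical stand-in for claim detection: one sentence = one candidate claim."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if s]


def summarise_evidence(verdicts):
    """Tally hand-supplied verdicts ("supports" / "disputes") for a single claim."""
    counts = Counter(verdicts)
    supported, disputed = counts["supports"], counts["disputes"]
    if supported > disputed:
        label = "mostly supported"
    elif disputed > supported:
        label = "mostly disputed"
    else:
        label = "mixed or no evidence"
    return {"supported": supported, "disputed": disputed, "label": label}


article = "The election was held in May. Turnout reached 140 percent."
claims = split_into_claims(article)

# Assumed, invented verdicts for illustration only. A real tool would
# gather evidence from external sources instead of a hard-coded dict.
example_verdicts = {
    claims[0]: ["supports", "supports"],
    claims[1]: ["disputes", "disputes", "supports"],
}

for claim in claims:
    print(claim, summarise_evidence(example_verdicts[claim]))
```

Even in this toy form, the output separates the claim that the evidence backs from the one it contradicts, which is the distinction a reader needs when judging a content-farm article.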

With this tool, Factiverse presents a real solution for identifying factually inaccurate content before it becomes too widespread.

It also empowers users to navigate the digital landscape with greater discernment and confidence.

Want to try out the Factiverse AI Editor?

Click here to find out more: https://factiverse.ai/

References: