
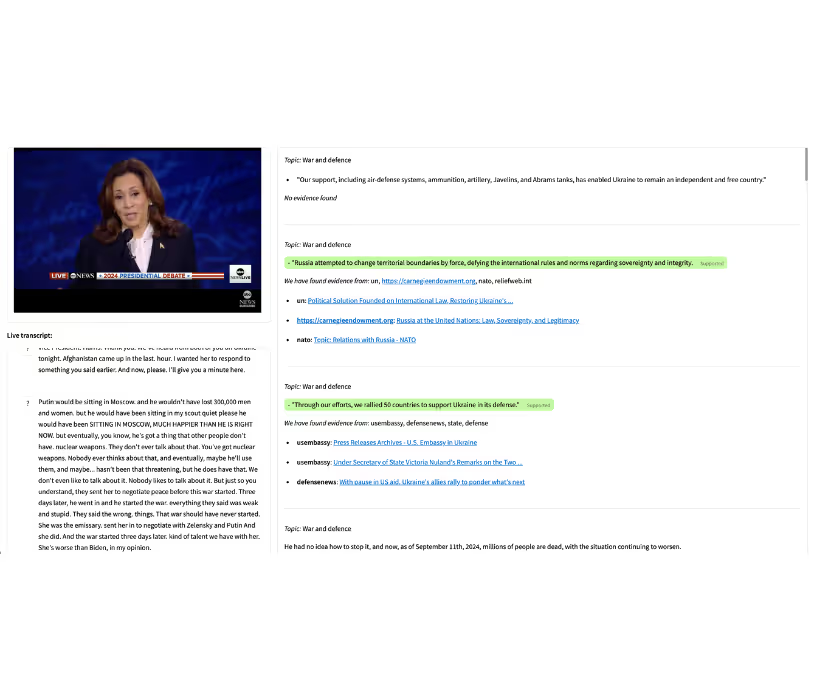

How do you make sense of it all in a world overflowing with information?

Misinformation spreads faster than the truth. Social media platforms and generative AI are amplifying false information at a scale that is unprecedented in all of human history.

We struggle to find reliable ways to obtain the knowledge we need from, for example, the roughly 1.5 billion websites online (Internet Live Stats).

We are being overwhelmed by the sheer amount of information at our disposal.

When we feel overwhelmed, that is where Factiverse steps in.

Using advanced information extraction technology, Factiverse is designed to scan and analyze vast portions of the internet, identifying and verifying factual claims across billions (and eventually trillions) of online sources.

But how does it actually scan the web in the first place? Here’s a behind-the-scenes look at how Factiverse collects and prepares content from online sources for fact-checking, broken down into five essential steps.

Step 1: Prioritize What to Scan

The internet is massive. Scanning every page equally isn’t efficient, and it would be incomprehensibly complex. That’s why Factiverse starts by prioritizing high-value sources.

Think of trusted news outlets, government databases, and academic journals.

Factiverse uses specialized algorithms that weigh credibility scores, domain authority, and topic relevance to decide which sources are most credible on a given topic. These signals include IFCN (International Fact-Checking Network) scores as well as selections informed by the Reuters Trust Scoring system. This lets us focus our resources and tools on the places where accurate information on a given topic is most likely to be found.
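As a rough illustration, the three signals named above could be combined into a single priority score per source. This is a minimal sketch under stated assumptions. The weights, the 0 to 1 scales, and the names `Source`, `priority_score`, and `prioritize` are hypothetical. The article does not say how Factiverse actually weighs credibility, domain authority, and topic relevance.

```python
from dataclasses import dataclass


@dataclass
class Source:
    domain: str
    credibility: float       # assumed 0..1, e.g. informed by IFCN or trust ratings
    domain_authority: float  # assumed 0..1
    topic_relevance: float   # assumed 0..1, relevance to the topic being checked


# Hypothetical weights: the real weighting is not published in the article.
WEIGHTS = {"credibility": 0.5, "domain_authority": 0.2, "topic_relevance": 0.3}


def priority_score(src: Source) -> float:
    """Weighted sum of the three signals; a higher score means crawl sooner."""
    return (
        WEIGHTS["credibility"] * src.credibility
        + WEIGHTS["domain_authority"] * src.domain_authority
        + WEIGHTS["topic_relevance"] * src.topic_relevance
    )


def prioritize(sources: list[Source], top_k: int) -> list[Source]:
    """Return the top_k sources, highest priority first."""
    return sorted(sources, key=priority_score, reverse=True)[:top_k]


if __name__ == "__main__":
    candidates = [
        Source("news.example", 0.9, 0.8, 0.7),
        Source("blog.example", 0.3, 0.4, 0.9),
        Source("gov.example", 0.95, 0.9, 0.2),
    ]
    print([s.domain for s in prioritize(candidates, top_k=2)])
    # → ['news.example', 'gov.example']
```

A linear weighted sum is only the simplest option. In practice the weights would likely vary by topic.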

Step 2: Crawl the Web Automatically

Once the priority list is set, web crawling begins.

This process involves navigating the internet in order to collect massive volumes of content, including articles, blog posts, and other data.

The web crawling process is constantly scanning both new and updated pages at high speed to ensure Factiverse always works with the freshest information.
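Crawlers that work from a priority list are commonly built around a best-first "frontier". This is a priority queue of URLs waiting to be visited, plus a set of URLs already seen so that nothing is queued twice. The sketch below assumes that design. The `crawl` function, the 0.9 priority decay for newly discovered links, and the in-memory `FAKE_WEB` dictionary (used so the example runs offline) are hypothetical. Recrawling pages to pick up updates is not shown.

```python
import heapq
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds: dict[str, float], fetch: Callable[[str], Optional[str]],
          max_pages: int = 100) -> list[str]:
    """Best-first crawl: always visit the highest-priority unvisited URL next."""
    frontier = [(-p, url) for url, p in seeds.items()]  # negate: heapq is a min-heap
    heapq.heapify(frontier)
    seen = set(seeds)
    visited = []
    while frontier and len(visited) < max_pages:
        neg_priority, url = heapq.heappop(frontier)
        html = fetch(url)
        if html is None:  # fetch failed; skip this URL
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            link = urljoin(url, href)  # resolve relative links
            if link not in seen:
                seen.add(link)
                # Discovered links get slightly lower priority than their parent page.
                heapq.heappush(frontier, (neg_priority * 0.9, link))
    return visited


# Tiny in-memory "web" standing in for real HTTP fetches.
FAKE_WEB = {
    "https://news.example/": '<a href="/story-1">Story</a><a href="https://blog.example/">Blog</a>',
    "https://news.example/story-1": "<p>Story text</p>",
    "https://blog.example/": "<p>Blog</p>",
}

if __name__ == "__main__":
    seeds = {"https://news.example/": 1.0, "https://blog.example/": 0.5}
    print(crawl(seeds, FAKE_WEB.get))
```

A real crawler would also respect `robots.txt`, rate-limit requests per host, and schedule revisits for pages that change often.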

Step 3: Clean and Filter the Content

Raw web data is messy. Pages are filled with distractions such as pop-ups and navigation menus, elements are often repeated, and some pages simply duplicate content found elsewhere.

To make the data usable, Factiverse filters out all the noise.

It extracts the main body of text and removes irrelevant elements, so that only the core content (the actual article or post) is used for the analysis. This streamlining is essential for fast, accurate processing.
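A very simple version of this cleaning step has two parts. First, it skips text inside tags that usually hold page chrome. Second, it fingerprints the remaining text so that exact duplicates can be dropped. This is a minimal sketch and not Factiverse's method. The `NOISE_TAGS` list is an assumption, and pop-ups usually need class- or id-based rules that are not shown here. Near-duplicate detection (for example with shingling) would also need more than an exact hash.

```python
import hashlib
import re
from html.parser import HTMLParser

# Tags whose contents are treated as noise (an assumed, non-exhaustive list).
NOISE_TAGS = {"script", "style", "nav", "header", "footer", "aside", "form"}


class MainTextExtractor(HTMLParser):
    """Keeps text that is not nested inside any noise tag."""

    def __init__(self):
        super().__init__()
        self.noise_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in NOISE_TAGS:
            self.noise_depth += 1

    def handle_endtag(self, tag):
        if tag in NOISE_TAGS and self.noise_depth > 0:
            self.noise_depth -= 1

    def handle_data(self, data):
        if self.noise_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def extract_main_text(html: str) -> str:
    parser = MainTextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def fingerprint(text: str) -> str:
    """Hash of case- and whitespace-normalized text, for exact-duplicate detection."""
    normalized = re.sub(r"\s+", " ", text.lower()).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def deduplicate(pages: dict[str, str]) -> dict[str, str]:
    """Map URL -> cleaned text, keeping only the first URL for each distinct text."""
    seen, unique = set(), {}
    for url, html in pages.items():
        text = extract_main_text(html)
        fp = fingerprint(text)
        if fp not in seen:
            seen.add(fp)
            unique[url] = text
    return unique


if __name__ == "__main__":
    page = "<nav>Home | About</nav><article><p>The bridge opened in 1932.</p></article>"
    print(extract_main_text(page))  # → The bridge opened in 1932.
```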

Step 4: Detect Language and Structure

Once cleaned, each page is analyzed to detect its language, content type, and structural elements. The goal is to extract information such as:

- Title of the source

- Author of the source

- Publish date of the source

- Labels associated with the source

- Claims detected within that source

- Verdicts of the source
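Many pages expose some of these fields directly in their HTML. The sketch below reads a few common conventions: the `<html lang>` attribute, the `<title>` tag, `<meta name="author">`, and the Open Graph `article:published_time` and `article:tag` properties. It is a minimal sketch, assuming pages follow those conventions, which many do not. The `lang` attribute is only a hint, since language detection is usually done with a text classifier. Claims and verdicts are not covered here.

```python
from html.parser import HTMLParser


class MetadataExtractor(HTMLParser):
    """Collects language, title, author, publish date, and tag labels."""

    def __init__(self):
        super().__init__()
        self.meta = {"language": None, "title": None, "author": None,
                     "published": None, "labels": []}
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "html" and a.get("lang"):
            self.meta["language"] = a["lang"]
        elif tag == "title":
            self._in_title = True
        elif tag == "meta":
            key = a.get("name") or a.get("property")
            content = a.get("content")
            if content is None:
                return
            if key == "author":
                self.meta["author"] = content
            elif key == "article:published_time":
                self.meta["published"] = content
            elif key == "article:tag":
                self.meta["labels"].append(content)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title and data.strip():
            self.meta["title"] = data.strip()


def extract_metadata(html: str) -> dict:
    parser = MetadataExtractor()
    parser.feed(html)
    parser.close()
    return parser.meta


SAMPLE = """<html lang="en"><head><title>Bridge turns 90</title>
<meta name="author" content="A. Reporter">
<meta property="article:published_time" content="2022-03-19T08:00:00Z">
<meta property="article:tag" content="infrastructure">
</head><body><p>...</p></body></html>"""

if __name__ == "__main__":
    print(extract_metadata(SAMPLE))
```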

Factiverse then breaks the content into sentences and paragraphs, which allows it to label the parts of the text that are likely to contain controversial claims or statements.
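The splitting and labeling step can be sketched with simple rules. The rules are a stand-in. Factiverse's actual approach is described in the research paper listed under Sources, and production systems typically use trained classifiers rather than keywords. In this sketch, the `CUE_WORDS` set, the regular expressions, and the rule "contains a number or a cue word" are all hypothetical.

```python
import re

# Hypothetical cue words; a real system would use a trained claim-detection model.
CUE_WORDS = {"percent", "million", "billion", "most", "least",
             "never", "always", "increased", "decreased"}

# Split after . ! or ? when followed by whitespace and an uppercase letter or digit.
SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+(?=[A-Z0-9])")


def split_text(text: str) -> list[list[str]]:
    """Split text into paragraphs (separated by blank lines), then into sentences."""
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    return [SENTENCE_SPLIT.split(p) for p in paragraphs]


def is_claim_candidate(sentence: str) -> bool:
    """Flag sentences containing a digit or a cue word as possibly check-worthy."""
    words = set(re.findall(r"[a-z]+", sentence.lower()))
    return bool(re.search(r"\d", sentence)) or bool(words & CUE_WORDS)


def label_sentences(text: str) -> list[tuple[int, str, bool]]:
    """Return (paragraph_index, sentence, is_candidate) triples."""
    return [(i, s, is_claim_candidate(s))
            for i, para in enumerate(split_text(text))
            for s in para]


if __name__ == "__main__":
    doc = ("Unemployment fell to 3.5 percent last year. Many people were surprised.\n\n"
           "What a lovely day it was.")
    for row in label_sentences(doc):
        print(row)
```

Note that the decimal point in "3.5" does not trigger a split, because the split requires whitespace after the punctuation.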

More detail on this process can be found in a peer-reviewed scientific paper by co-founder Dr. Vinay Setty (see Sources).

This step ensures the system understands the format and context of what it’s reading.

Step 5: Extract Claims and Key Info

Factiverse uses Natural Language Processing (NLP) and site parsing to pull out the most important parts: factual claims, named entities (such as people and places), numbers, and the relationships between them.

This structured information becomes the input for deeper analysis such as checking those claims against reliable databases, identifying contradictions, or gauging the credibility of supporting sources.
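To make "structured information" concrete, the sketch below turns one claim sentence into a record that holds entities, numbers, and their relationships. It is a deliberately crude sketch and does not show how Factiverse does this. Entities are found with a small dictionary lookup (a gazetteer) instead of a trained named-entity model. Co-occurrence in the same sentence stands in for real relation extraction. `GAZETTEER`, `ClaimRecord`, and `extract` are hypothetical names, and the entity names are fictional.

```python
import re
from dataclasses import dataclass, field

# Hypothetical gazetteer (dictionary-based NER); the names are fictional.
GAZETTEER = {"Freedonia": "PLACE", "Rufus Firefly": "PERSON"}

NUMBER = re.compile(r"\d+(?:\.\d+)?")


@dataclass
class ClaimRecord:
    text: str
    entities: dict[str, str] = field(default_factory=dict)  # surface form -> type
    numbers: list[float] = field(default_factory=list)
    relations: list[tuple[str, float]] = field(default_factory=list)


def extract(sentence: str) -> ClaimRecord:
    """Turn one claim sentence into a structured record."""
    record = ClaimRecord(text=sentence)
    for name, label in GAZETTEER.items():
        # Whole-word match, so "Freedonian" does not count as "Freedonia".
        if re.search(rf"\b{re.escape(name)}\b", sentence):
            record.entities[name] = label
    record.numbers = [float(n) for n in NUMBER.findall(sentence)]
    # Co-occurrence in the same sentence as a crude proxy for a relationship.
    record.relations = [(e, n) for e in record.entities for n in record.numbers]
    return record


if __name__ == "__main__":
    print(extract("Rufus Firefly says Freedonia has 1.2 million residents."))
```

A downstream check could then compare each number in `relations` against a trusted reference value for that entity.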

Conclusion

Scanning a billion web pages might sound impossible. But with smart prioritization and powerful crawling tools, Factiverse makes it doable for companies and individuals to distill the information they need into key, digestible insights.

These five steps form the foundation for everything that comes next: detecting misinformation, validating truth, and helping the internet become a more trustworthy place.

Sources:

- Internet Live Stats: https://www.internetlivestats.com/total-number-of-websites/

- Factiverse peer-reviewed research paper: https://arxiv.org/pdf/2402.12147
