Health misinformation isn’t new. For centuries, false cures, miracle tonics, and medical myths have circulated through communities. Sometimes they are passed along with good intentions; other times they are driven by profit or ideology.

What is new is the speed, reach, and precision with which misinformation spreads in the digital era.

Social media platforms, encrypted messaging apps, and even AI-generated content have supercharged the problem (SOURCE). In the time it takes to fact-check a single viral post, hundreds more pieces of misleading content may already be in circulation.

Every week, another “miracle cancer cure” makes the rounds, or a brand-new conspiracy theory about vaccines appears.

The consequences are not just theoretical. They can be deadly.

The long history of dubious cures

Before modern science, communities relied on word of mouth, folk remedies, and charismatic healers to address illness. Without a systematic way to test claims, it was easy for unproven treatments to take root.

From “snake oil” salesmen in the 19th century to miracle cure pamphlets in the 20th, misinformation has long been a persistent public health threat (SOURCE).

Even as the scientific method gave us rigorous research and evidence-based medicine, skepticism of scientific consensus has persisted. In the 1990s, one of the most notorious examples came to the forefront and kickstarted modern-day vaccine conspiracy theories: a now-retracted study falsely claimed a link between the MMR (measles, mumps, rubella) vaccine and autism (SOURCE).

Further investigation revealed that the research was fraudulent, but by then the damage was done.

Vaccine hesitancy surged, and the legacy of that misinformation still lingers today.

Why modern health misinformation is more dangerous

Differences of opinion arise all the time, and in many areas of life they cause minimal harm. Health misinformation is different. Here, false narratives can directly influence life-and-death decisions.

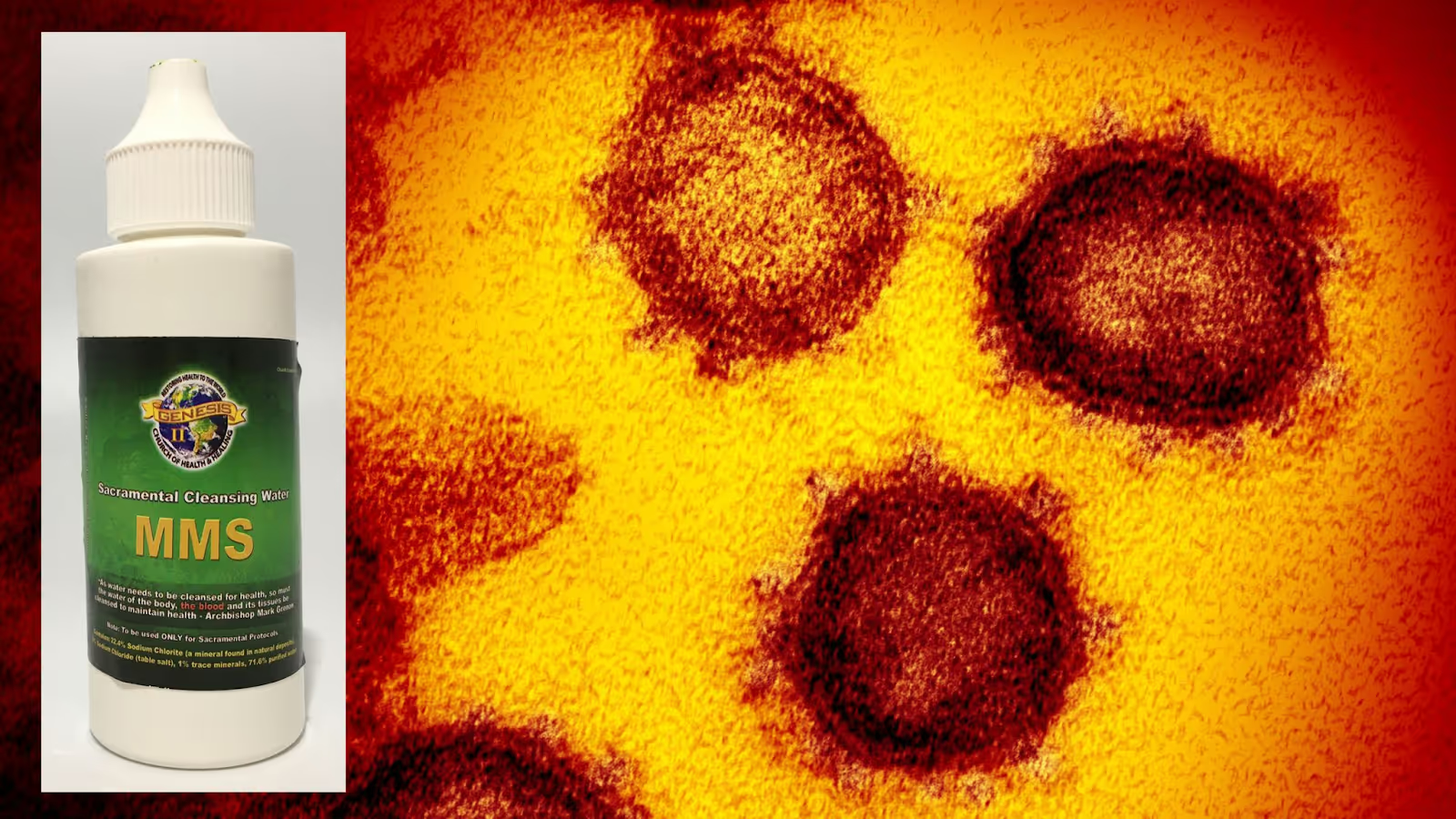

Consider these real-world cases:

- Miracle Mineral Supplement (MMS) – Promoted online as a cure for COVID-19 and other illnesses, MMS is a sodium chlorite solution that, once mixed with an acid as its sellers instruct, produces chlorine dioxide, a powerful bleaching agent. Ingesting it has caused severe vomiting, acute liver failure, and even death in multiple countries. (SOURCE)

- Methanol poisoning in Iran (2020) – Social media rumors claimed that drinking industrial methanol could cure or prevent COVID-19. The result: over 5,000 poisonings and hundreds of deaths within just a few months. (SOURCE)

- Black salve for skin cancer – Marketed as a “natural” alternative to surgery, this corrosive paste destroys skin tissue indiscriminately. Patients who used it instead of seeking proven medical care have suffered severe disfigurement and tumor spread. (SOURCE)

These aren’t fringe cases. Even a cursory search of the web turns up many similar ones. They offer a glimpse of the real-world fallout of letting dangerous health claims spread unchecked.

The most concerning aspect of modern health misinformation is the far wider audience it can reach through the internet and social media.

The challenge of neutralizing health misinformation

Public health agencies know that delivering accurate information efficiently is essential. But in reality, they’re playing a constant game of catch-up.

Misinformation often starts in closed or semi-closed spaces: private WhatsApp groups, encrypted messaging channels, niche podcasts, or livestreams.

By the time a harmful claim surfaces on a large public platform, it has likely already reached millions in less visible online spaces. Compounding the issue, false health claims are rarely vague. They’re often specific, actionable, and urgent-sounding.

This tends to make them simpler and more persuasive than nuanced scientific advice. Think about how viral posts frame their claims: “Drink this today to prevent cancer” rather than “Clinical trials show mixed results over years of study.”

Stripped of context, the viral version feels urgent in a way the careful one does not, and misinformation consistently exploits that urgency gap.

How AI can help detect and neutralize misinformation faster

To truly address health misinformation, we need to understand the narrative before it becomes a public health crisis. That means:

- Monitoring in real time – Monitor not just text posts, but also video, audio, and image-based content across multiple platforms. Many harmful health claims emerge first in these formats.

- Extracting key claims quickly – It’s not enough to know misinformation is circulating; you need to know exactly what’s being said so you can respond with precision.

- Responding with targeted, evidence-based communication – The most effective counter-messaging directly addresses the false claim, explains why it’s wrong, and offers credible alternatives.

This is where new AI tools offered by companies like Factiverse can make a difference.

Factiverse technology can analyze vast amounts of online content in real time, flag emerging narratives, and verify claims against trusted medical and scientific sources.

Instead of waiting for misinformation to become viral, health authorities can see it forming, understand the context, and craft rapid responses that prevent harm before it takes place.
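To make the idea of extracting claims and flagging emerging narratives more concrete, here is a deliberately simple sketch. It is not how Factiverse or any production system works: it assumes a hand-written watchlist of keyword patterns in place of a trained model, and the `Post` type, the claim labels, and the `threshold` parameter are all hypothetical names invented for illustration.

```python
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Post:
    """A piece of monitored content (hypothetical structure)."""
    platform: str
    text: str


# Hypothetical watchlist mapping a claim label to a keyword pattern.
# A real system would use trained models, not hand-written patterns.
CLAIM_PATTERNS = {
    "mms_cures_covid": re.compile(
        r"\b(mms|miracle mineral)\b.*\b(cure|cures|treat|treats)\b", re.I
    ),
    "methanol_prevents_covid": re.compile(
        r"\bmethanol\b.*\b(cure|cures|prevent|prevents)\b", re.I
    ),
    "black_salve_treats_cancer": re.compile(
        r"\bblack salve\b.*\b(cure|cures|remove|removes|treat|treats)\b", re.I
    ),
}


def extract_claims(post):
    """Return the labels of every watchlist claim the post appears to make."""
    return [
        label
        for label, pattern in CLAIM_PATTERNS.items()
        if pattern.search(post.text)
    ]


def emerging_claims(posts, threshold=2):
    """Count claim mentions and keep those seen at least `threshold` times."""
    counts = Counter(label for post in posts for label in extract_claims(post))
    return {label: n for label, n in counts.items() if n >= threshold}
```

Passing a batch of `Post` objects to `emerging_claims` returns only the claims that are starting to repeat, which is the point at which a team might decide to prepare a response. Verifying each claim against trusted medical sources is a separate step that this sketch does not attempt.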

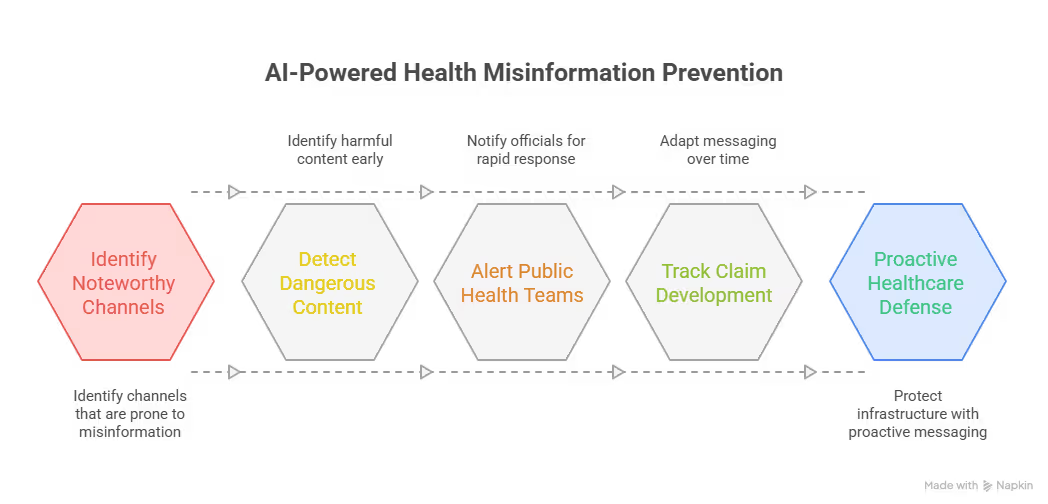

Moving from reaction to prevention

Let’s take a few common examples of how AI can enable a shift to prevention. Imagine being able to:

- Detect a dangerous “home remedy” video in a small online community on YouTube before it’s shared widely.

- Alert public health teams immediately, giving them time to prepare a clear, fact-based rebuttal.

- Monitor how a false claim develops over time and adapt messaging accordingly.

This level of insight and speed transforms how misinformation can be managed. It turns the fight from damage control into a proactive defense for key healthcare infrastructure.

The bottom line

Health misinformation isn’t just a matter of “different opinions.” It’s a public health threat with real-world consequences: hospitalizations, poisonings, and preventable deaths. The digital age has amplified both the scale of the problem and the urgency of the solution.

Neutralizing it requires more than good intentions. It requires the ability to see harmful narratives as they emerge, understand their spread, and respond quickly, with clarity and authority.

With AI tools like Factiverse, health authorities, journalists, and fact-checkers can move from chasing down falsehoods to stopping them before they spiral out of control.

Because when the stakes are measured in lives, speed and accuracy aren’t optional. They’re essential.

Source List:

- University of Luxembourg - “Analysing Users' Perceptions of Health Misinformation on Social Media”

- NPR - “A History of Snake-Oil Salesmen”

- HSE (Ireland) - “Measles, mumps and rubella”

- TGA (Australia) - “Miracle Mineral Solution (MMS)”

- “A syndemic of COVID-19 and methanol poisoning in Iran”

- Australian Journal of General Practice - “Black salve in a nutshell”
