Elections are under attack.

Recent investigations in Norway found that anonymous groups spent over 300K to coordinate the spread of false information about the upcoming general election on Meta platforms (SOURCE).

This incident is worrying on its own, but it is also part of a global trend: similar tactics have been employed in other democratic countries.

The impact of these actions is made all the more worrying by recent findings that young people in the EU use social media as their primary news source (SOURCE). Many worry that relying on these platforms leaves young people more open to being manipulated toward polarizing viewpoints.

And since the narrative on these platforms can be easily controlled, it becomes feasible to influence election outcomes as well. To show the gravity of the problem, let’s look at how foreign interference is being used to disrupt elections across the world.

Election interference cases

United States (2024-2025)

The US is no stranger to efforts to disrupt its electoral processes. Russia, Iran, and China engaged in extensive disinformation campaigns, which included making fake bomb threats against polling places and hiring right-wing influencers to spread pro-Kremlin messaging. The goal of these efforts was to undermine public confidence and polarize voters in order to influence swing states (SOURCE).

Germany (2025)

During the German parliamentary elections, a Russia-backed disinformation campaign flooded social media with fabricated polls and deepfakes in support of far-right parties. Key influencers and troll farms reportedly amplified divisive content meant to polarize the electorate (SOURCE).

France (2024-2025)

During the French parliamentary elections, Russian actors launched coordinated cyber and disinformation attacks targeting election debates and democratic trust. This included promoting a narrative that French authorities were themselves interfering in the democratic process (SOURCE).

Romania (2024)

In a notable example from last year, the entire Romanian presidential election was annulled because of Russian-backed campaigns. These campaigns employed a variety of tactics, including cyberattacks and disinformation that favored pro-Russian candidates, with TikTok bots amplifying falsehoods (SOURCE).

Poland (2025)

In Poland, an unprecedented Russian disinformation campaign used thousands of fake accounts on social media platforms. The content was aimed at polarizing and destabilizing the presidential race with anti-Western propaganda (SOURCE).

Taiwan (2024)

Taiwan is no stranger to interference from China. Chinese state agencies conducted decentralized disinformation campaigns on social media platforms like TikTok, spreading pro-China narratives to sway the presidential election (SOURCE).

Japan (2025)

Russia was suspected of manipulating information through social media during Japan's 2025 Upper House election campaign. The suspected method was spreading anti-government propaganda via bot accounts that boosted the popularity of the far-right Sanseito party (SOURCE).

Philippines (2025)

The Philippine president launched a probe into allegations of Chinese state-backed troll farms spreading disinformation across various social media platforms to influence elections. A top National Security Council official told the country’s senate that signs of Chinese information operations had been detected on these platforms (SOURCE).

Bangladesh (2024)

In Bangladesh, coordinated misinformation and fake expert content circulated on platforms like Facebook during the election year. The problem became serious enough that the government began using the Digital Security Act to control online speech in order to mitigate the risks of election misinformation (SOURCE).

Exposing platform weaknesses

A question that gets repeated ad nauseam when it comes to this issue is “how does this keep happening?”

The answer usually comes down to social media companies and their limited safeguards. Flaws in how they enforce their own policies are proving detrimental to democratic processes. While they claim to protect users from malicious and polarizing content, in practice their safeguards are porous or inconsistently applied.

This becomes clear as day when you look at recent actions by social media platforms. According to the Norwegian Media Authority, these platforms rejected videos and removed links about source awareness and disinformation ahead of the general election in Norway, citing that the content was “political” in nature and could therefore be interpreted as polarizing or divisive.

The purpose of these links and videos was to serve as an information campaign that strengthens young people's ability to form independent opinions based on facts and not distortions.

This inconsistency over what may and may not be promoted on platforms presents a tangible risk to the information environment in the lead-up to an election.

A risk that is already being exploited by many who seek to take advantage.

What’s needed now is stronger safeguards

What’s becoming clearer about the relationship between social media platforms and democratic processes is that it needs more safeguards. Democratic processes depend on reliable information flows between state bodies and the general public.

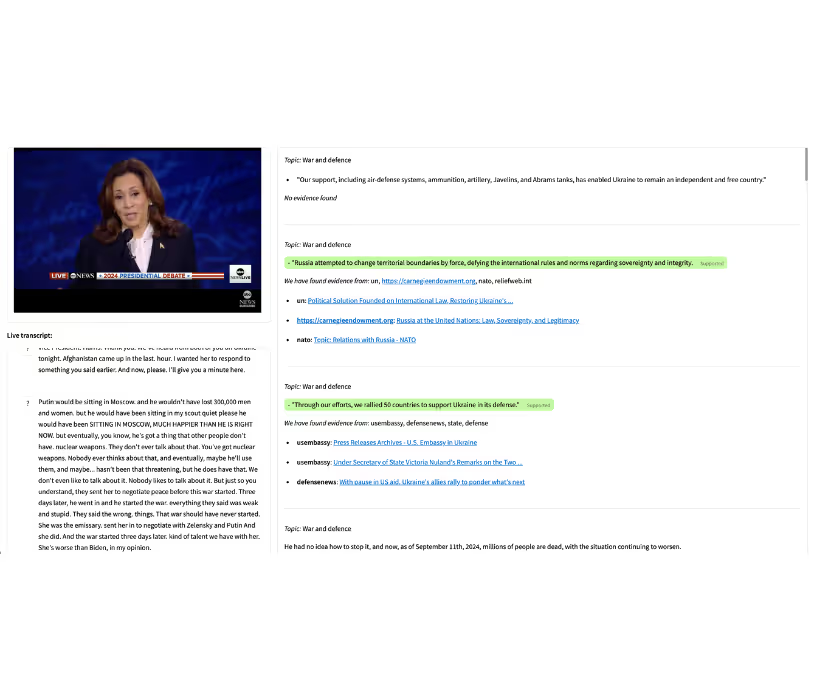

There is also a significant lack of monitoring and filtering, and the measures currently in place are proving insufficient at best. Processes like monitoring, claim detection and source validation are becoming central to ensuring that democracy maintains its integrity during its most fundamental function.

It stands to reason that AI-based tools will increasingly serve as part of the essential infrastructure for democracy. We need to start thinking of them as digital seatbelts for democracy.

But these tools cannot be implemented haphazardly. A core principle for them must be transparency, which is especially important when they are used for moderation and fact-checking.

Examples of stronger safeguards

Platforms should implement stricter and more transparent content moderation policies

TikTok and Meta have been under scrutiny for their inconsistency in moderating political content, and for good reason. Blocking state-run organizations from providing necessary information about the electoral process while allowing anonymous organizations to spread false information should not be the norm.

Social media users need better digital literacy tools to evaluate claims critically

What’s becoming clearer as time goes on is that social media users need stronger digital literacy skills. Without a baseline of skills for spotting distortions of the truth, users are left open to manipulation by malicious actors. Ukraine is one example, where there is said to be high resistance to disinformation efforts.

Policymakers should set baseline requirements to protect democratic discourse online

Institutional intervention is a key factor in pushing private entities to enforce meaningful change that protects the stakeholders they serve. Without institutional change, such as real-time monitoring of social media during electoral processes, governments are stuck being reactive rather than proactive when it comes to pushing out effective messaging. This monitoring can be done with a wide variety of AI tools like Factiverse, Logically and others.

Democracy is not just elections anymore

The idea that democracy is just a series of elections no longer reflects the modern world we live in. In the digital age, democracy demands a lot more: the information environment in which elections take place needs to be protected.

It is up to both government institutions and social media companies to do that now.

Sources:

- Euronews – Young Europeans face rising threat from misinformation as social media becomes main news source

- NPR – 2024 election: U.S. officials cite foreign influence from Russia, China, Iran

- Council of Europe – Cyber interference and democracy: Council of Europe actions and responses (2025)

- L’Express – Exclusif: Les 13 agressions de Poutine en France – l’effrayante note des services secrets

- Politico Europe – High early turnout as Romanians vote in critical election

- Forbes – Poland’s presidential election campaign faced unprecedented Russian interference, officials say

- Finansavisen – Skremmende synes Vestre om anonym trollkonto som sponser virale videoer i valgkampen

- The Guardian – Taiwan presidential election: China steps up influence campaign

- Japan Times – Russia accused of election interference in Japan in 2025

- Reuters – Philippine president orders probe into alleged foreign interference in elections

- Financial Times – How platforms and politics shaped European elections in 2025